Let me show you some data.

I’m going to show you the Match rate and mean Step 1 score for three groups of residency applicants. These are real data, compiled from the NRMP’s Charting Outcomes in the Match reports.

Ready?

- U.S. Allopathic Seniors: 92% match rate; Step 1 232.3

- U.S. Osteopathic Seniors: 83% match rate; Step 1 225.8

- IMGs (both U.S. and non-U.S. citizen): 53% match rate; Step 1 223.6

Now. What do you conclude when you look at these numbers?

__

In the debate over the USMLE’s score reporting policy, there’s one objection that comes up time and time again: that graduates from less-prestigious medical schools (especially IMGs) need a scored USMLE Step 1 to compete in the match with applicants from “top tier” medical schools.

In fact, this concern was recently expressed by the president of the NBME in an article in Academic Medicine (quoted here, with my emphasis added).

“Students and U.S. medical graduates (USMGs) from elite medical schools may feel that their school’s reputation assures their successful competition in the residency application process, and thus may perceive no benefit from USMLE scores. However, USMGs from the newest medical schools or schools that do not rank highly across various indices may feel that they cannot rely upon their school’s reputation, and have expressed concern in various settings that they could be disadvantaged if forced to compete without a quantitative Step 1 score. This concern may apply even more for graduates of international medical schools (IMGs) that are lesser known, regardless of any quality indicator.”

The funny thing is, when I look at the data above, I’m not sure why we would conclude that IMGs are gaining advantage from a scored Step 1. In fact, we might conclude just the opposite – that a scored Step 1 is a key reason why IMGs have a lower match rate.

So let’s consider this objection further. In this post, I’d like to answer two questions.

- Does a scored USMLE ‘level the playing field’ for lower-tier USMGs/IMGs – or does it simply perpetuate disadvantage?

- And regardless of the answer to the first question, does reporting USMLE scores make sense from a policy standpoint?

__

Some background

It’s undeniable that Step 1 scores matter in residency selection. Here, just for example, are USMLE Step 1 scores by match status and specialty for U.S. seniors, from the most recent NRMP report.

__

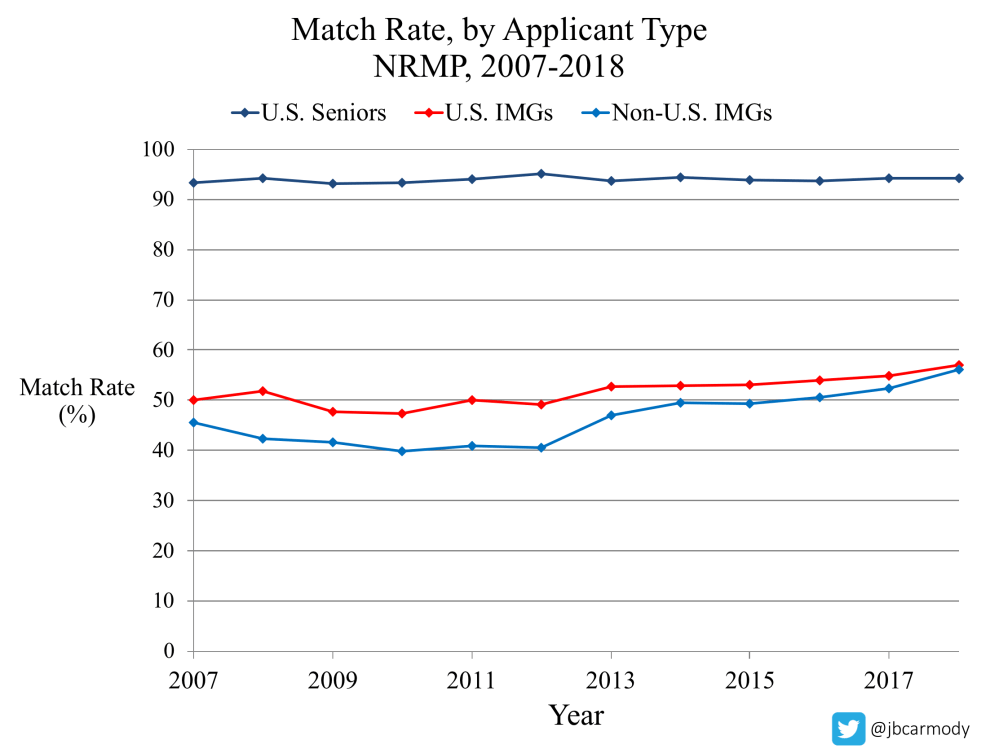

And it’s also true that IMGs face an uphill battle in the Match.

But is this a level playing field?

But in light of the data we started with above, there’s a problem with the “level playing field” argument: students at the top U.S. medical schools do quite well on Step 1.

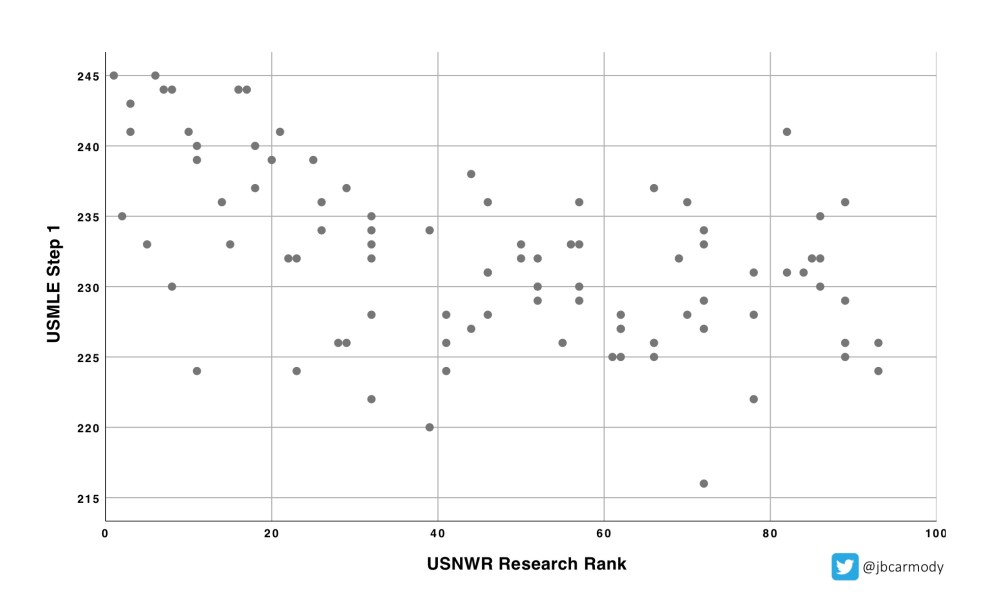

Here is a scatterplot of the mean Step 1 score by medical school, ordered by the 2019 U.S. News & World Report “Best Medical Schools” research rankings.

As you can see, students at the best schools (at least, the “best” schools according to USNWR rankings) have the best USMLE Step 1 scores: look at the clustering of scores in the upper left quadrant.

Students at the ‘best’ medical schools also have the best MCAT scores

Many students at less prestigious allopathic schools, osteopathic schools, and Caribbean medical schools differ most from their colleagues at the “top” allopathic schools in one area: MCAT scores.

And there is a correlation between other standardized test scores and the USMLE Step 1.

Could it be that Step 1 scores simply carry forward an advantage already set in motion by earlier standardized tests? Are we just measuring the same thing over and over again?

To demonstrate the correlation between these tests, I also plotted the mean MCAT and USMLE Step 1 score for schools who reported their data for the 2019 U.S. News & World Report “Best Medical Schools” rankings.

Some notes on methods appear below, but I take two conclusions from these data.

- There is a strong linear relationship between the mean MCAT and mean USMLE Step 1 score by school. Every 1-point increase in MCAT is associated with a 1.08-point increase in USMLE Step 1 (95% CI: 0.89-1.28; p<.001).

- There aren’t a whole lot of schools that dramatically “overperform” or “underperform” this simple model. The standardized residuals from the above regression equation follow a bell-curve distribution. Five schools did have large positive or negative standardized residuals (MCAT/USMLE Step 1/standardized residual): University of Missouri (508/241/+3.30), University of Texas-Galveston (507/237/+2.60), Mayo (516/245/+2.17), New Mexico (505/216/-1.95), UC-Davis (510/220/-2.29). Overall, 55% of the variation in mean USMLE Step 1 scores by school is explained by differences in the MCAT score alone (R²=0.55).
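For readers who want to see the arithmetic behind a slope, an R², and a standardized residual, here is a minimal sketch in Python. To be clear about what is assumed: the function name `fit_line` and the seven data points are made up for illustration and are not the USNWR figures. The standardized residual here is the simple version (residual divided by the residual standard error), which may differ slightly from what a statistics package reports. The sketch also skips the confidence interval, which needs a t-distribution lookup.

```python
import math

def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x

    # Residual = observed value minus the value predicted by the line.
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    ss_res = sum(r ** 2 for r in residuals)
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    r_squared = 1 - ss_res / ss_tot

    # Residual standard error with n - 2 degrees of freedom.
    sigma = math.sqrt(ss_res / (n - 2))
    # Simplified standardized residuals (this ignores leverage).
    standardized = [r / sigma for r in residuals]
    return slope, intercept, r_squared, standardized

# HYPOTHETICAL school-level means, invented for illustration only.
# These are NOT the USNWR data analyzed in this post.
mean_mcat = [505, 507, 509, 511, 513, 515, 517]
mean_step1 = [224, 229, 228, 233, 236, 235, 241]

slope, intercept, r_squared, standardized = fit_line(mean_mcat, mean_step1)
print(f"slope: {slope:.2f} Step 1 points per MCAT point")
print(f"R^2: {r_squared:.2f}")
for mcat, z in zip(mean_mcat, standardized):
    flag = "  <- far from the line" if abs(z) >= 2 else ""
    print(f"MCAT {mcat}: standardized residual {z:+.2f}{flag}")
```

A school with a large positive standardized residual scored higher on Step 1 than its MCAT mean would predict, and a large negative one scored lower. That is all the “overperform” and “underperform” labels above mean.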

___

The bottom line

When I look at the scatterplots above, I conclude that, contrary to the assertions of Drs. Katsufrakis and Chaudhry quoted earlier, it doesn’t appear that students at elite medical schools “feel that their school’s reputation assures their successful competition” in residency selection. They seem to be doing quite well on Step 1, thank you very much.

So if the “best” schools have the highest Step 1 scores…why do we not look at these data and conclude that the residency selection advantage gained by graduates of “elite medical schools” is BECAUSE of their Step 1 scores, not in spite of them?

Does the use of Step 1 scores in residency selection “level the playing field”? Or would disadvantaged candidates be better off if we played a different game altogether?

___

Acknowledging the obvious objection

I know there are many students out there who will reject this logic. I’ve already heard from many of them on Twitter.

The issue, of course, is that even though USMLE Step 1 scores disadvantage IMGs and ‘lower-tier’ USMGs on a systemic level, they offer an individual applicant the hope of catching a program director’s eye.

Listen, I get it. Step 1 is the devil we know, and there are many students out there who would rather “compete” using their Step 1 score than with some other metric. Furthermore, if we just got rid of Step 1 scores and provided nothing new to replace them, students at top-tier medical schools would still enjoy an advantage. (If we simply shift focus to other existing areas of the application, who do you think has better opportunities for research, big name letter writers, etc.?)

But let me make three points in rebuttal.

1. A pass/fail USMLE is a means to an end.

I don’t support a pass/fail USMLE because I think that evaluating candidates using other existing metrics is better. I support it because our idolatry of Step 1 scores in residency selection keeps us from critically evaluating the whole process and working to measure things that matter and truly match candidates with the best program based on both aptitude and goodness of fit.

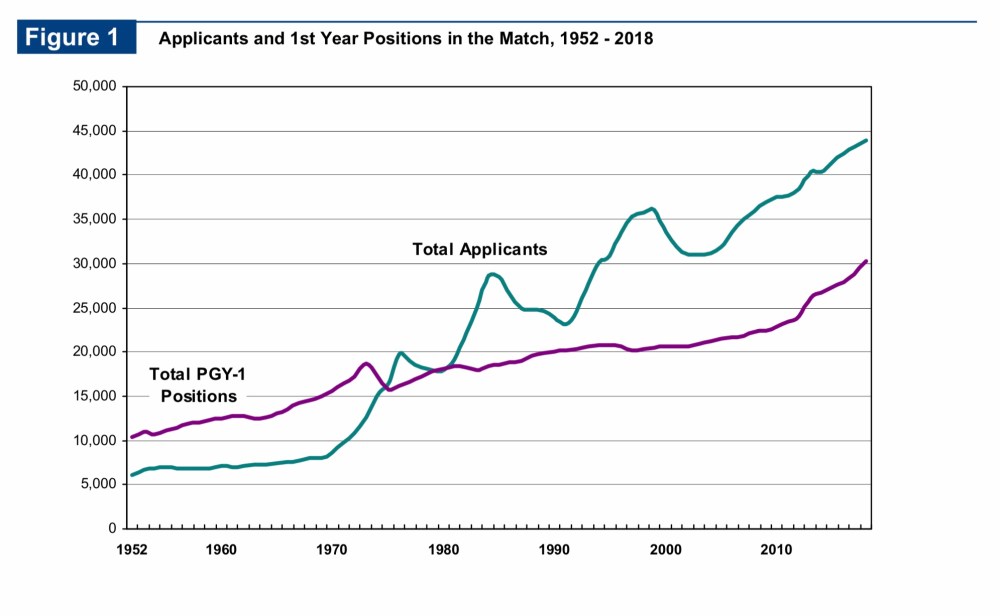

2. Score reporting policy doesn’t change the basic math of the Match.

For the past 25+ years, there has been a significant mismatch between the number of residency positions and the number of applicants.

How we choose to score Step 1 does nothing to change this basic math. And yet, often we talk about it like it does. Not long ago, I debated a faculty member on Twitter who claimed that with no Step 1 scores, most residency programs would simply stop interviewing IMGs, because that would be the “simplest metric” that could be used.

Is that argument supported by data? Or is it just fearmongering?

We’re still going to have the same number of spots – and U.S. allopathic graduates can’t fill all of them. (So far as I know, Harvard and Johns Hopkins aren’t planning to increase their entering class size ten-fold if Step 1 is reported pass/fail.)

Sure, programs who currently match IMGs could certainly choose to stop interviewing them. If they don’t care if their program goes unmatched, that is.

Remember, many programs do not interview or rank IMGs as it is: considering all programs in all specialties, only 42% do, according to the 2018 NRMP Residency Program Directors’ Survey.

But that figure varies widely based on the competitiveness of the specialty. For instance, 90% of pathology programs consider non-U.S. IMGs, while only 6% of orthopedic surgery programs do. In fact, the more competitive the specialty is for U.S. seniors, the fewer programs there are who interview/rank IMGs.

The competitiveness of the Match process is driven by basic math – how many candidates, and how many positions in the field. How the USMLE reports its results doesn’t change that one bit. In my opinion, programs who find value in training IMGs (or who need IMGs to fill their positions) will still choose to interview and rank IMGs, regardless of the evaluation methods available. In fact, if we had more meaningful metrics – metrics that actually predicted residency success, unlike the USMLE – more programs might be willing to consider IMGs. So why not choose methods that are more meaningful?

3. Weighting individual payoffs over societal payoffs is bad policy.

If you consider residency selection policy only from the standpoint of individual applicants, it’s a zero-sum game. There is a fixed number of residency spots, and any metric we use to select candidates will benefit one applicant at the expense of another. Use Step 1 scores, or use something else… but if there are two candidates and only one spot, one candidate wins and the other loses.

However, considering the payoffs only to individual applicants takes an unnecessarily narrow view of residency selection policy. What we should really be asking ourselves is, to the extent that the end product of medical education is a public good, are we selecting residents in a way that leads to increased good for society?

I say we aren’t. I don’t think there is any value to students or their future patients in spending hours memorizing soon-forgotten basic science minutiae for the USMLE Step 1.

Even if it is true that our best students have the highest scores on Step 1 – is that really how we want them to spend their energy and talent? If students have to compete for residency positions, then let’s at least have them compete in an endeavor that makes them all better doctors.

Listen, I took USMLE Step 1 in 2005. On a personal level, I don’t have anything to gain from USMLE scores being reported as a three-digit number, a ‘P’ or ‘F’, or anything else. I’ve gotten interested in this issue because I see the excessive focus on USMLE Step 1 as something that’s harming the quality of both undergraduate and graduate medical education.

I think that sucks – and I think we can do better.


Notes

To ensure transparency in the analyses above, a couple of comments on the methods:

- Some have asked why I didn’t report regression statistics for the first scatterplot (on Step 1 scores and USNWR ranking). I’m not trying to hide anything – I chose not to do a linear regression for a couple of reasons. For one thing, the data on the x axis are ordinal. (That is, it’s not clear what 1 unit measures mathematically, or if the distance between ranks 1 and 2 is the same as the distance between ranks 53 and 54.) For another, the relationship between USNWR ranking and Step 1 score is not exactly an independent one – the test scores factor into the school’s USNWR rank. Nonetheless, the USNWR rankings are a simple way to capture the way we think about which medical schools are the most prestigious – and to me, the scatterplot alone is sufficient to demonstrate that students at these medical schools score the highest on Step 1.

- The data points in the MCAT/USMLE scatterplot above are for schools, not individuals. However, the correlation between (old) MCAT and USMLE scores for individuals is good.

- These graphs consider only allopathic schools, since many students at osteopathic schools may pursue an alternative licensure pathway (COMLEX).

- There are 144 allopathic medical schools in the U.S. Not all of these schools provided data to USNWR. Thus, the plot above includes data points for only 67% (97/144) of allopathic schools.

- It seems likely that schools who choose not to report their data to USNWR do so non-randomly – i.e., those with lower MCAT/Step 1 scores are less likely to report their data than schools with higher scores. My suspicion is that these schools would have points in the lower left quadrant of the MCAT/USMLE scatterplot above – but it is possible that the relationship between MCAT and USMLE Step 1 scores is different for schools who did not report their data.

- The USNWR Step 1 data are reported by the individual schools (i.e., not obtained independently from the NBME, for instance). There may be differences in the ways different schools calculate their mean score (i.e., which students are included).

- I did remove one outlier: Wright State, which reported a mean USMLE Step 1 of 260 (?!?). If anyone out there is privy to data suggesting that is an accurate figure, I’d be happy to re-run the regression.

- Also, if you’re like me, and you remember the MCAT being three sections graded up to 15 points, the correlation for “old” MCAT scores is essentially the same. Only 91 allopathic schools reported these data, but the model performed similarly (slope 1.62; 95% CI: 1.27-1.98; R²=0.48).

- Lastly, just like always, if you want to check my figures, please do. The data are available from U.S. News & World Report. And if you find a mistake, please let me know. You don’t have to agree with my analysis or conclusions – but the data should be honestly presented.